Introduction

We’re announcing the release of ConNER (Concept Named Entity Recognition), a lightweight model that extracts concepts from text. At 18.6 MB, it is optimized for edge devices and can run directly on laptops, tablets, or phones without significant computational resources.

Motivation

Extracting concepts from text often relies on large language models that demand substantial computational power and can be slow. ConNER offers a lightweight alternative, specifically trained for concept annotation. It achieves over 90% accuracy on our validation set and is compact enough to run on edge devices, providing real-time predictions.

Use Cases

ConNER can be used as a building block for various applications:

- Analyzing lecture notes and textbooks

- Building concept maps from educational content

- Creating searchable concept indices

- Supporting educational software

- Enhancing educational content management systems

Examples

-

Input:

Microeconomics focuses on individual markets and consumer behavior.

Output:["Microeconomics"] -

Input:

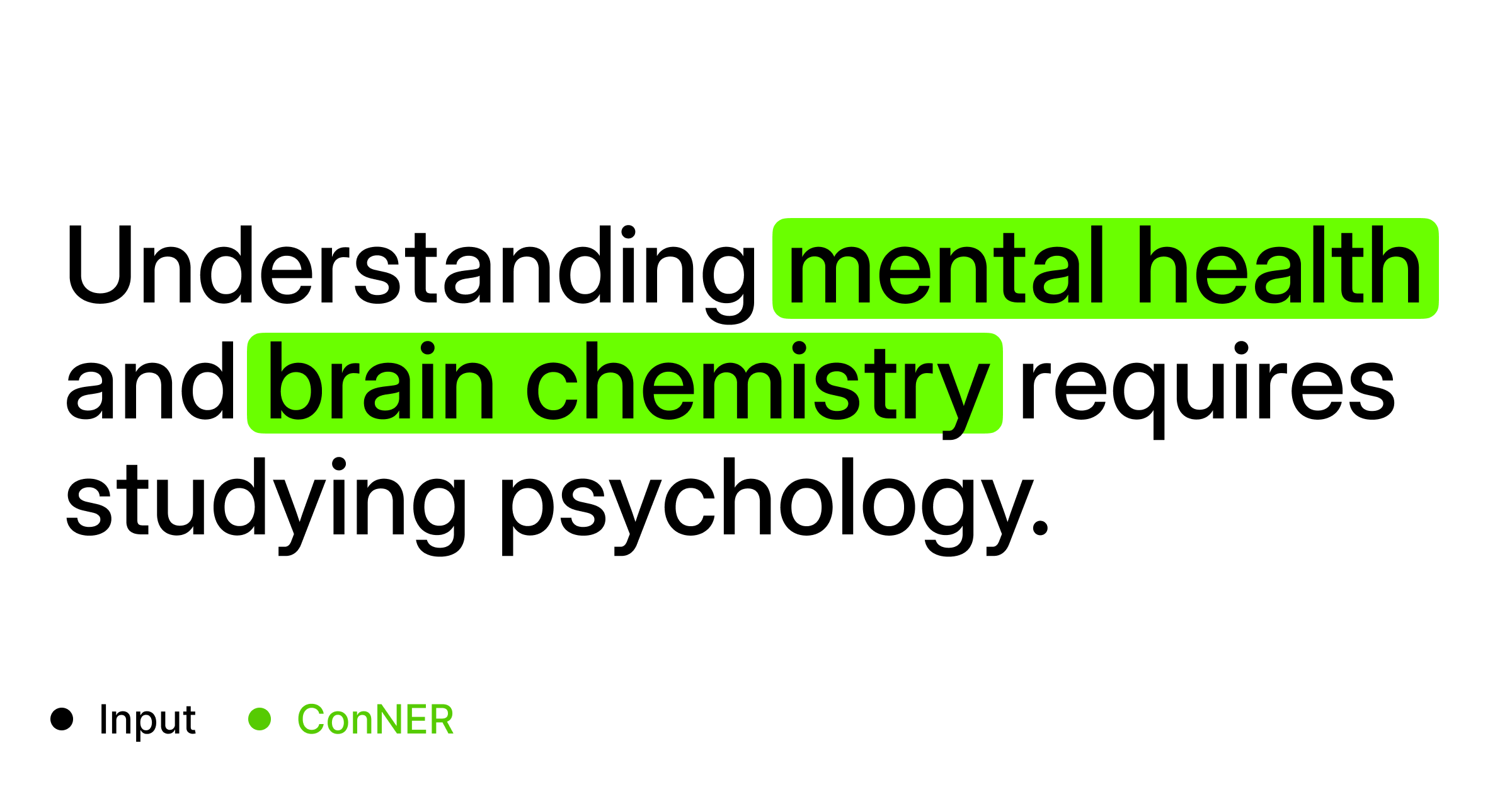

Understanding mental health and brain chemistry requires studying psychology.

Output:["mental health", "brain chemistry"] -

Input:

Machine learning is a subset of artificial intelligence that enables systems to learn from data.

Output:["Machine learning"] -

Input:

The human brain is the most complex organ in the body.

Output:["human brain"]

Technical Details

Architecture

ConNER is built on prajjwal1/bert-tiny with a classification layer for BIO (Beginning, Inside, Outside) tagging. The model:

- Processes sequences up to 128 tokens

- Uses WordPiece tokenization

- Outputs three classes: O (Outside), B-CONCEPT (Beginning), I-CONCEPT (Inside)

- Includes dropout (0.1) for regularization
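To turn the three output classes back into concept strings, the per-token tags need a short decoding pass. The sketch below is one way to do that and is not the released post-processing code: `decode_concepts` is a hypothetical helper, the token and tag lists are hand-written for illustration, and it assumes that WordPiece continuation pieces carry the `##` prefix and follow the tag of the word's first piece.

```python
from typing import List


def decode_concepts(tokens: List[str], tags: List[str]) -> List[str]:
    """Group WordPiece tokens tagged B-CONCEPT / I-CONCEPT into concept strings."""
    concepts: List[str] = []
    current: List[str] = []  # words of the concept span currently being built

    for token, tag in zip(tokens, tags):
        is_subword = token.startswith("##")
        piece = token[2:] if is_subword else token

        if is_subword and current:
            # Assumption: a continuation piece extends the last word of the
            # open span, regardless of the tag predicted for the piece itself.
            current[-1] += piece
        elif tag == "B-CONCEPT":
            # A new concept starts; flush the previous one if it is still open.
            if current:
                concepts.append(" ".join(current))
            current = [piece]
        elif tag == "I-CONCEPT" and current:
            current.append(piece)
        else:
            # O tag, or a stray I-CONCEPT with no open span: close the span.
            if current:
                concepts.append(" ".join(current))
            current = []

    if current:
        concepts.append(" ".join(current))
    return concepts


# Illustrative, hand-written tokens and tags (not real model output).
tokens = ["micro", "##economics", "focuses", "on", "individual", "markets"]
tags = ["B-CONCEPT", "I-CONCEPT", "O", "O", "O", "O"]
print(decode_concepts(tokens, tags))  # ['microeconomics']
```

The sketch works on token strings, so the casing of the result follows the tokens. Recovering the original casing, as in the examples above, would need character offsets into the input text, which are not shown here.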

Training Data

The model was trained on a proprietary dataset of academic content and notes, including:

- Student notebooks with highlighted concept annotations

- OCR-processed handwritten notes

- Synthetic data generated by open-source LLMs

Training Configuration

- Optimizer: Adam (learning rate: 2e-4, weight decay: 0.01)

- Loss: SparseCategoricalCrossentropy

- Batch size: 16

- Epochs: 300
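For readers unfamiliar with the loss named above, the "sparse" variant takes integer class ids per token rather than one-hot vectors. The following dependency-free sketch shows the computation for a single sequence. It is an illustration of the loss itself, not the training code: the label-id mapping (0 = O, 1 = B-CONCEPT, 2 = I-CONCEPT) and the `ignore_index` masking of padded positions are assumptions, since the post does not say how labels are encoded or whether padding is masked.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one token's class logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_categorical_crossentropy(
    logits_per_token: Sequence[Sequence[float]],
    labels: Sequence[int],
    ignore_index: int = -100,  # assumption: marker for padded positions
) -> float:
    """Mean negative log-likelihood of the integer labels, skipping ignored tokens."""
    losses = []
    for logits, label in zip(logits_per_token, labels):
        if label == ignore_index:
            continue  # padded position: contributes nothing to the loss
        probs = softmax(logits)
        losses.append(-math.log(probs[label]))
    return sum(losses) / len(losses) if losses else 0.0


# Assumed label ids: 0 = O, 1 = B-CONCEPT, 2 = I-CONCEPT.
logits = [[0.2, 2.5, 0.1], [0.1, 0.3, 2.0], [3.0, 0.2, 0.1]]
labels = [1, 2, 0]
print(sparse_categorical_crossentropy(logits, labels))
```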

Model Card

Key Information

- Size: 18.6 MB

- Accuracy: over 90% on validation set

- Input: Text sequences up to 128 tokens

- Output: List of extracted academic concepts

- Platform: Runs on CPU, optimized for edge devices

Limitations

- English text only

- Best performance on academic/educational content

- Maximum sequence length of 128 tokens

- The model sometimes misses complex multi-word concepts, especially in definitional contexts

- Occasional false positives on pronouns and general terms

- Performance varies based on sentence structure and context

- Some domain-specific concepts might be missed depending on training data coverage

- Best performance on clear, direct academic writing
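One common way to work around the 128-token limit is to split longer inputs into overlapping windows and run the model on each window separately. The sketch below shows that idea on an already tokenized sequence. It is a workaround under stated assumptions, not part of the release: `chunk_tokens` is a hypothetical helper, the two reserved positions assume BERT-style `[CLS]` and `[SEP]` tokens count toward the limit, and the overlap of 16 tokens is an arbitrary illustrative value.

```python
from typing import List, Sequence


def chunk_tokens(
    tokens: Sequence[str],
    max_len: int = 128,  # sequence limit stated in the model card
    reserved: int = 2,   # assumption: room for [CLS] and [SEP]
    overlap: int = 16,   # assumption: tokens shared by neighbouring windows
) -> List[List[str]]:
    """Split a token sequence into overlapping windows that fit the model limit."""
    window = max_len - reserved
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need 0 <= overlap < max_len - reserved")

    step = window - overlap
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(list(tokens[start:start + window]))
        if start + window >= len(tokens):
            break  # this window already reaches the end of the input
    return chunks


# Placeholder tokens standing in for a long tokenized document.
long_input = [f"tok{i}" for i in range(300)]
for chunk in chunk_tokens(long_input):
    print(len(chunk), chunk[0], chunk[-1])
```

Concepts found in the overlapping region can appear in two windows, so the per-window results would need deduplicating before use.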

Example Limitations

- Input: In psychology, cognitive dissonance describes the mental stress from holding contradictory beliefs.
  Output: [] (misses “cognitive dissonance” as a concept)

- Input: It can be found by adding horizontally the individual supply curves.
  Output: ["It"] (incorrectly labels a pronoun as a concept)
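Pronoun false positives like the one above can be reduced with a simple post-processing filter on the model output. This is a possible mitigation on the application side, not something ConNER does itself: `drop_pronoun_concepts` and the pronoun list are hypothetical and deliberately short, and a real application would likely need a fuller list.

```python
from typing import List

# Assumption: a small, illustrative set of English pronouns to reject.
PRONOUNS = frozenset({
    "i", "you", "he", "she", "it", "we", "they",
    "this", "that", "these", "those",
})


def drop_pronoun_concepts(concepts: List[str]) -> List[str]:
    """Remove extracted concepts that consist of a single pronoun."""
    return [c for c in concepts if c.strip().lower() not in PRONOUNS]


print(drop_pronoun_concepts(["It"]))  # []
```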

Downloads

- Pre-trained model (18.6 MB), last updated on November 28, 2024